Showing posts with label Guide. Show all posts


Saturday, April 7, 2012

What Is SEO?

2:56 AM

Google, Guide, SEO, SEO Beginners Guide, Tips

Whenever you enter a query in a search engine and hit 'enter', you get a list of web results that contain that query term. Users tend to visit the websites at the top of this list, as they perceive those to be more relevant to the query. If you have ever wondered why some of these websites rank higher than others, much of the answer lies in a powerful web marketing technique called Search Engine Optimization (SEO).

SEO is a technique which helps search engines find and rank your site higher than the millions of other sites in response to a search query. SEO thus helps you get traffic from search engines.

This SEO tutorial covers the essentials you need to know about Search Engine Optimization: what it is, how it works, and how the ranking criteria of the major search engines differ.

1. How Search Engines Work

The first basic truth you need to know to learn SEO is that search engines are not humans. While this might be obvious to everybody, the differences between how humans and search engines view web pages are not. Unlike humans, search engines are text-driven. Although technology advances rapidly, search engines are far from intelligent creatures that can appreciate the beauty of a cool design or enjoy the sounds and movement in movies. Instead, search engines crawl the Web, looking at particular site items (mainly text) to get an idea of what a site is about. This brief explanation is not the most precise because, as we will see next, search engines perform several activities in order to deliver search results: crawling, indexing, processing, calculating relevancy, and retrieving.

First, search engines crawl the Web to see what is there. This task is performed by a piece of software called a crawler or a spider (or Googlebot, in Google's case). Spiders follow links from one page to another and collect everything they find on their way. Given the number of pages on the Web (over 20 billion), a spider cannot visit every site daily just to check whether a new page has appeared or an existing page has been modified; sometimes crawlers may not visit your site for a month or two.
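To make the "follow links from one page to another" idea concrete, here is a minimal breadth-first crawler sketch. It is not how Googlebot works internally: the `WEB` dictionary of example.com pages is a hypothetical stand-in for real HTTP fetches, and `crawl` and `max_pages` are illustrative names. Note that the orphan page, which nothing links to, is never found.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "Web" (URL -> HTML) standing in for real HTTP fetches.
WEB = {
    "http://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "http://example.com/about": '<a href="/">Home</a>',
    "http://example.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "http://example.com/blog/post-1": "<p>No links here.</p>",
    "http://example.com/orphan": "<p>Nothing links to this page.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.links.append(href)


def crawl(start_url, max_pages=100):
    """Breadth-first crawl: fetch a page, queue every new page it links to, repeat."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = WEB.get(url)
        if html is None:  # broken link: nothing to fetch
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links like "/about"
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


print(crawl("http://example.com/"))
```

The `max_pages` cap plays the role of a crawl budget: a real spider cannot revisit everything all the time, which is why some pages wait weeks between visits.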

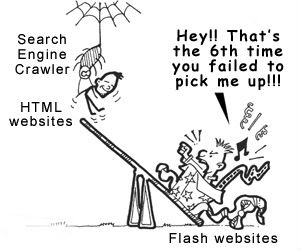

What you can do is check what a crawler sees on your site. As already mentioned, crawlers are not humans: they do not see images, Flash movies, JavaScript, frames, or password-protected pages and directories. If you have lots of these on your site, run a spider simulator to check whether this content is visible to the spider. If it is not visible, it will not be spidered, indexed, or processed; in a word, it will be non-existent for search engines.
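A very rough spider simulator can be sketched with Python's standard `html.parser`. This is a simplification under stated assumptions: text inside `script`, `style` and `object` (Flash) tags is treated as invisible, and for images only the `alt` text is read. The class and function names (`SpiderView`, `spider_text`) and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class SpiderView(HTMLParser):
    """Rough approximation of what a text-only crawler reads on a page."""

    # Content inside these tags is treated as invisible (scripts, styles, plugins).
    HIDDEN = {"script", "style", "object"}

    def __init__(self):
        super().__init__()
        self.hidden_depth = 0  # > 0 while inside a hidden tag
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HIDDEN:
            self.hidden_depth += 1
        elif tag == "img" and not self.hidden_depth:
            # Assumption: the image itself is invisible, only its alt text is read.
            alt = dict(attrs).get("alt")
            if alt:
                self.words.extend(alt.split())

    def handle_endtag(self, tag):
        if tag in self.HIDDEN and self.hidden_depth:
            self.hidden_depth -= 1

    def handle_data(self, data):
        if not self.hidden_depth:
            self.words.extend(data.split())


def spider_text(html):
    parser = SpiderView()
    parser.feed(html)
    parser.close()
    return " ".join(parser.words)


page = (
    "<h1>Blue Widgets</h1>"
    '<script>document.write("Shown only by JavaScript")</script>'
    '<img src="logo.png" alt="Acme logo">'
    '<object data="intro.swf">Flash intro</object>'
    "<p>Handmade widgets.</p>"
)
print(spider_text(page))  # Blue Widgets Acme logo Handmade widgets.
```

Anything that only appears through JavaScript or a Flash object drops out of the result, which is exactly the content that risks being "non-existent" for the spider.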

After a page is crawled, the next step is to index its content. The indexed page is stored in a giant database, from which it can later be retrieved. Essentially, indexing means identifying the words and expressions that best describe the page and assigning the page to particular keywords. A human could not process such amounts of information, but search engines generally handle this task just fine. Sometimes they might not get the meaning of a page right, but if you help them by optimizing it, it will be easier for them to classify your pages correctly and for you to get higher rankings.
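"Assigning the page to particular keywords" is usually described as building an inverted index: a map from each word to the pages that contain it. Here is a minimal sketch; the tokenizer (lower-cased alphanumeric runs) and the two sample pages are assumptions for illustration, and real engines store far more per entry (positions, counts, and so on).

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map every word to the set of page URLs that contain it (an inverted index)."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


pages = {  # hypothetical crawled pages
    "/widgets": "Blue widgets and red widgets",
    "/gadgets": "Red gadgets for the kitchen",
}
index = build_index(pages)
print(sorted(index["red"]))  # ['/gadgets', '/widgets']
```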

When a search request arrives, the search engine processes it, i.e. it compares the search string in the request with the indexed pages in the database. Since more than one page is likely to contain the search string (in practice, millions of pages), the search engine then calculates the relevancy of each matching page to the search string.
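The "compare the search string with the indexed pages" step can be sketched as a lookup in an inverted index, keeping only pages that contain every query word. This simple AND match is one assumption among many possible matching rules, and the tiny `INDEX` below is hypothetical.

```python
import re


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


# Hypothetical inverted index: word -> set of pages containing it.
INDEX = {
    "red": {"/widgets", "/gadgets"},
    "widgets": {"/widgets"},
    "kitchen": {"/gadgets"},
}


def matching_pages(query):
    """Return the pages that contain every word of the query (a simple AND match)."""
    postings = [INDEX.get(word, set()) for word in tokenize(query)]
    if not postings:
        return set()
    return set.intersection(*postings)


print(matching_pages("Red widgets"))  # {'/widgets'}
```

Only the pages that survive this matching step go on to the relevancy calculation.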

There are various algorithms to calculate relevancy. Each of these algorithms assigns different relative weights to common factors like keyword density, links, or metatags. That is why different search engines return different results pages for the same search string. What is more, all major search engines, like Yahoo!, Google, and Bing, periodically change their algorithms, and if you want to stay at the top, you also need to adapt your pages to the latest changes. This is one reason (the other being your competitors) to make SEO a permanent effort if you would like to be at the top.
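To see why different weights produce different results pages, here is a toy weighted-sum scorer. Everything in it is made up for illustration: the factor scores (scaled 0 to 1), the two "engines" and their weights. No real search engine publishes its formula, and real ones use far more factors. The final `sorted` call also mirrors the retrieval step: results ordered from most to least relevant.

```python
# Hypothetical, pre-computed factor scores (0..1) for two pages matching a query.
PAGES = {
    "/a": {"keyword_density": 0.9, "links": 0.2, "meta_tags": 0.8},
    "/b": {"keyword_density": 0.4, "links": 0.9, "meta_tags": 0.3},
}

# Two made-up engines that weigh the same factors differently.
ENGINE_X = {"keyword_density": 0.6, "links": 0.2, "meta_tags": 0.2}
ENGINE_Y = {"keyword_density": 0.2, "links": 0.7, "meta_tags": 0.1}


def relevancy(factors, weights):
    """Weighted sum of a page's factor scores."""
    return sum(weights[name] * value for name, value in factors.items())


def rank(pages, weights):
    """Order page URLs from most to least relevant under the given weights."""
    return sorted(pages, key=lambda url: relevancy(pages[url], weights), reverse=True)


print(rank(PAGES, ENGINE_X))  # ['/a', '/b']  (on-page keywords dominate)
print(rank(PAGES, ENGINE_Y))  # ['/b', '/a']  (links dominate)
```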

The last step in search engines' activity is retrieving the results. Basically, this means displaying them in the browser, i.e. the endless pages of search results sorted from the most relevant to the least relevant sites.

2. Differences Between the Major Search Engines

Although the basic principle of operation of all search engines is the same, the minor differences between them lead to major differences in the results. Different factors matter to different search engines. There were times when SEO experts joked that Bing's algorithms were intentionally made the exact opposite of Google's. While this might have a grain of truth, it is a matter of fact that the major search engines favor different things, and if you plan to conquer more than one of them, you need to optimize carefully.

There are many examples of the differences between search engines. For instance, for Yahoo! and Bing, on-page keyword factors are of primary importance, while for Google links are very, very important. Also, for Google sites are like wine: the older, the better, while Yahoo! has no marked preference for sites and domains with a long history (i.e. older ones). Thus your site may need more time to mature before it reaches the top in Google than in Yahoo!

Search Engine Optimization (SEO) Beginners Guide Part 4

2:33 AM

Google, Guide, SEO, SEO Beginners Guide, Tips

This is the fourth and last post in the series: Beginners Guide to Search Engine Optimization (SEO)

In this post we shall read about:

Search Engine Results Page, SERPs, Search Engine Submission, Website Submission, Search Terms, Sitemap, Spider, Title Tags, Three-Way Link Exchange, Unique Pageview, Unique Visitor, White Hat SEO, XML, XML Feed.

You might want to read these posts before proceeding:

About: Algorithm, Algorithmic Results, Alt Tag, Alt Text, analytics, Anchor Text, Backlink, Banned, Black Hat SEO, Blacklisted, Broken Link, Click-Through Rate, Cloaking, Conversion, Conversion Analytics, Conversion Rate, Crawler, Delisted, Directory, Doorway Page, Dynamic Content, Flash Optimization.

About: Gateway Page, Geographic Segmentation, Geographical Targeting, Gray Hat SEO, Hidden Text, Hit, html, Hyperlink, Inbound Links, Index, Keyword, Keyword Density, Link, Link Baiting, Link Exchange, Link Farm, Link Popularity, Link Text, Listings, Local Search, Meta Description Tag, Meta Keywords Tag, Meta Robots Tag, Meta Search Engine, Meta Tags.

About: Natural Search Engine Listings, Natural Search Engine Optimization, Optimization Services, Organic Listings, Organic Optimization, Outbound Links, Page Rank, Paid Inclusion, Paid Listings, Paid Placement, Pay-Per-Click, Position, PPC, PR, Query, Rank, Reciprocal Link Exchange, Results Page, Robot, robots.txt, search engine, Search Engine Marketing, Search Engine Optimization.

Search Engine Results Page (SERPs)

SERPs, or Search Engine Results Pages (also called Results Pages), are the dynamic web pages that display search results for a particular query. These pages contain links to the relevant pages, each with a short description of the linked page.

Search Engine Submission / Submission / Website Submission

Search Engine Submission, also known as Website Submission, Blog Submission or simply Submission, is a feature offered by most search engines that lets blog and website owners submit their URLs so that the pages of the blog or website are considered for indexing and inclusion in the search results. While everyone is free to submit new blogs and websites, the search engines do not necessarily index all submitted sites.

Search Terms

Search Terms, Keywords or Queries are the phrases you give the search engine, which it then uses to list links to relevant web pages. These terms are often misspelled or have more commonly used synonyms, which the search engine then suggests along with the results. Search Terms or Keywords are at the heart of any search engine, since the entire foundation of data mining, or retrieving information based on input, rests on those words!
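The "did you mean" suggestions for mistyped terms can be approximated with plain string similarity. The sketch below uses Python's standard `difflib.get_close_matches`; the vocabulary and the 0.8 similarity cutoff are assumptions, and real engines rely on much richer signals (such as what other users typed) rather than this alone.

```python
import difflib

# Hypothetical vocabulary of terms the engine has seen often.
KNOWN_TERMS = ["optimization", "search", "engine", "sitemap", "keyword"]


def did_you_mean(query):
    """Replace each unknown query word with its closest known term, if one is close enough."""
    corrected = []
    for word in query.lower().split():
        if word in KNOWN_TERMS:
            corrected.append(word)
        else:
            close = difflib.get_close_matches(word, KNOWN_TERMS, n=1, cutoff=0.8)
            corrected.append(close[0] if close else word)
    return " ".join(corrected)


print(did_you_mean("serch engine optimisation"))  # search engine optimization
```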

Sitemap

A sitemap is a webpage which contains links to some or all the pages of the website or blog, so that those pages can be easily found by both search engines and visitors. Search Engines often rely on Sitemaps in order to keep their index up-to-date.
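Besides an HTML sitemap page, search engines also accept sitemaps in the XML format described at sitemaps.org, which is simply a `urlset` of `url`/`loc` entries. Here is a minimal generator sketch using the standard `xml.etree.ElementTree`; the `build_sitemap` name and the mywebsite.com URLs are illustrative, and optional fields such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return an XML sitemap (sitemaps.org format) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


xml = build_sitemap(["http://mywebsite.com/", "http://mywebsite.com/about.html"])
print(xml)
```

The resulting file is typically saved as sitemap.xml at the site root, and its URL can also be listed in robots.txt.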

Spider

A search engine spider, bot, robot or crawler is the program used by search engines to look for new and modified information on web pages. These spiders crawl web pages, moving from one page to the next via hyperlinks. Google's spider is called Googlebot.

Title Tags

The title tag of a webpage is the phrase used to supply the search engines as well as normal visitors with a basic idea of what topic the page is about. The title tag is a piece of code in HTML, which is placed within the “head” section of the webpage.

Three-Way Link Exchange

Three-way link exchanges are a system of creating links among three web pages or websites such that there is no reciprocal linking. Suppose Page ‘A’ links to Page ‘B’, ‘B’ to ‘C’, and ‘C’ back to ‘A’. This way, the linked pages are expected to rank higher without being penalized by search engines for artificial (inorganic) reciprocal linking. The technique is also used to create closed loops of links among a large number of websites, so that new websites in the loop are quickly indexed and ranked higher.
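The difference between a reciprocal exchange and a three-way loop is easy to see on a small link graph. This sketch (with a hypothetical `LINKS` graph and illustrative function names) finds pairs of pages that link directly to each other, and loops of three pages A to B to C to A that contain no direct link back.

```python
from itertools import permutations

# Hypothetical link graph: page -> set of pages it links to.
LINKS = {
    "A": {"B"},
    "B": {"C"},
    "C": {"A"},  # A -> B -> C -> A: a three-way exchange
    "D": {"E"},
    "E": {"D"},  # D <-> E: a reciprocal exchange
}


def reciprocal_pairs(links):
    """Pairs of pages that link directly to each other (X -> Y and Y -> X)."""
    return {tuple(sorted((x, y))) for x, targets in links.items()
            for y in targets if x in links.get(y, set())}


def three_way_loops(links):
    """Loops X -> Y -> Z -> X among three distinct pages with no link in the reverse direction."""
    loops = set()
    for x, y, z in permutations(links, 3):
        forward = y in links[x] and z in links[y] and x in links[z]
        backward = x in links[y] or y in links[z] or z in links[x]
        if forward and not backward:
            # Store each loop once, starting from its alphabetically first page.
            i = (x, y, z).index(min(x, y, z))
            loops.add((x, y, z)[i:] + (x, y, z)[:i])
    return loops


print(reciprocal_pairs(LINKS))  # {('D', 'E')}
print(three_way_loops(LINKS))   # {('A', 'B', 'C')}
```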

Unique Pageview

A unique pageview occurs when a web browser, at the instruction of a human or a computer program, requests a webpage that it has not accessed within a specified time period. The popularity of a website or blog is often measured in daily and monthly unique pageviews. Uniqueness is tracked using one or more methods: cookies (small pieces of data stored on the computer that accessed the page), the IP address of that computer, or others.

Unique Visitor

A unique visitor is said to have visited a website when one or more pages of the website are downloaded by a remote user, computer program or search engine bot, and that user agent hasn’t visited the website in a specified period of time before now. Unique visitors are most often tracked using cookies and IP addresses.
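Both definitions above boil down to the same rule: count an event only if its key was not seen during the preceding time window. For pageviews the key is (visitor, page); for visitors it is just the visitor. Here is a sketch assuming a 30-minute window and a hypothetical log in which visitor ids stand in for cookie or IP-based tracking.

```python
WINDOW = 30 * 60  # assumed "specified time period": 30 minutes, in seconds

# Hypothetical access log: (visitor id from a cookie or IP, page, time in seconds)
LOG = [
    ("v1", "/home", 0),
    ("v1", "/home", 60),    # reload within the window: not a unique pageview
    ("v1", "/about", 120),
    ("v2", "/home", 300),
    ("v1", "/home", 4000),  # over 30 min since v1 last viewed /home: unique again
]


def count_unique(log, key, window=WINDOW):
    """Count events whose key was not seen during the preceding `window` seconds."""
    last_seen = {}
    count = 0
    for event in sorted(log, key=lambda e: e[2]):
        k, t = key(event), event[2]
        if k not in last_seen or t - last_seen[k] > window:
            count += 1
        last_seen[k] = t
    return count


unique_pageviews = count_unique(LOG, key=lambda e: (e[0], e[1]))
unique_visitors = count_unique(LOG, key=lambda e: e[0])
print(unique_pageviews, unique_visitors)  # 4 3
```

Changing `WINDOW` to a day or a month gives the daily and monthly figures mentioned above.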

White Hat SEO

White Hat SEO was originally known simply as Search Engine Optimization or SEO; the term was coined when certain webmasters started using unethical methods to gain an unfair advantage over their competitors. The unethical brand is called Black Hat SEO, and the ethical, accepted brand is known as White Hat SEO.

XML

XML, or Extensible Markup Language, is a close cousin of HTML. It is used to store and transfer text-based data using custom tags. In recent years XML has played a huge role in dynamic-content websites through AJAX (Asynchronous JavaScript And XML).

XML Feed

XML Feed is a document which contains the latest additions to one or more webpages, and is used to inform other scripts and sites about the updates. XML Feeds are used as sitemaps on most blogs and websites, to inform search engines about the latest pages and data.
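Reading the latest additions out of a feed is straightforward. The sketch below parses a hypothetical RSS 2.0 feed (one common XML feed format; Atom is another, with different element names) with the standard `xml.etree.ElementTree` and returns each item's link in feed order.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 feed, trimmed to the elements used below.
FEED = """<rss version="2.0"><channel>
  <title>My Blog</title>
  <item><title>Post 2</title><link>http://mywebsite.com/post-2.html</link></item>
  <item><title>Post 1</title><link>http://mywebsite.com/post-1.html</link></item>
</channel></rss>"""


def latest_links(feed_xml):
    """Return the <link> URL of every <item> in an RSS 2.0 feed, in feed order."""
    channel = ET.fromstring(feed_xml).find("channel")
    return [item.findtext("link") for item in channel.findall("item")]


print(latest_links(FEED))
```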

Despite my best effort to include all the important terms in this series, I have almost certainly missed some. So, if you need help understanding certain terms related to SEO, feel free to post a comment below!

Friday, April 6, 2012

Search Engine Optimization (SEO) Beginners Guide Part 3

5:37 AM

Google, Guide, SEO, SEO Beginners Guide, Tips

This is the third post in the series: Beginners Guide to Search Engine Optimization (SEO)

In this post we shall read about: Natural Search Engine Listings, Natural Search Engine Optimization, Optimization Services, Organic Listings, Organic Optimization, Outbound Links, Page Rank, Paid Inclusion, Paid Listings, Paid Placement, Pay-Per-Click, Position, PPC, PR, Query, Rank, Reciprocal Link Exchange, Results Page, Robot, Robots.txt, Search Engine, Search Engine Marketing, Search Engine Optimization, SEM, SEO.

You might want to read these first:

SEO Beginners Guide Part 1

About: Algorithm, Algorithmic Results, Alt Tag, Alt Text, analytics, Anchor Text, Backlink, Banned, Black Hat SEO, Blacklisted, Broken Link, Click-Through Rate, Cloaking, Conversion, Conversion Analytics, Conversion Rate, Crawler, Delisted, Directory, Doorway Page, Dynamic Content, Flash Optimization

SEO Beginners Guide Part 2

About: Gateway Page, Geographic Segmentation, Geographical Targeting, Gray Hat SEO, Hidden Text, Hit, html, Hyperlink, Inbound Links, Index, Keyword, Keyword Density, Link, Link Baiting, Link Exchange, Link Farm, Link Popularity, Link Text, Listings, Local Search, Meta Description Tag, Meta Keywords Tag, Meta Robots Tag, Meta Search Engine, Meta Tags

Natural Search Engine Listings

Commonly known simply as search rankings, Natural Search Engine Listings are also referred to as Organic Search Listings. Unlike paid listings, these results appear on the Search Engine Results Pages (SERPs) only because the search engine's algorithm finds them most relevant to the search query. Thus, in theory, you can improve your website's organic search ranking only through Natural Search Engine Optimization.

Natural Search Engine Optimization

Commonly known as simply SEO, Natural Search Engine Optimization is the process of improving your organic search engine rankings by carrying out activities like keyword optimization, increasing the update frequency, building inbound links from other web pages, adding meta data etc. The result is increased search engine visitor traffic to your blog/website and more sales/subscriptions.

Optimization Services

Also known as SEO services, these are meant to help your website or blog attain a desired rank on the SERPs through services like link building and keyword optimization, with constant monitoring and analysis of results. While it is best to carry out your own SEO, huge websites and networks of blogs are difficult to maintain and optimize for search engines at the same time. Thus SEO services are in great demand, since their providers are more experienced in the activities involved and promise results within a short time frame.

Organic Listings (or Organic Optimization)

Same as Natural Search Engine Listings (or Natural Search Engine Optimization)

Outbound Links

The links from a given page to other web pages are referred to as outbound links. These may point to other websites (external outbound links) or to pages on the same website (internal outbound links). An excessively large number of unrelated external outbound links can raise suspicion in a search engine's algorithm, leading to penalization (with Google, usually a reduced PageRank) or even blacklisting from the organic search listings.
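Sorting a page's links into internal and external ones only requires comparing host names after resolving relative links. Here is a sketch using the standard `urllib.parse`; the `classify_outbound` name and URLs are illustrative, and note the simplifying assumption that "www.mywebsite.com" and "mywebsite.com" count as different hosts.

```python
from urllib.parse import urljoin, urlparse


def classify_outbound(page_url, hrefs):
    """Split a page's links into internal (same host) and external (other hosts) URLs."""
    host = urlparse(page_url).netloc.lower()
    internal, external = [], []
    for href in hrefs:
        target = urljoin(page_url, href)  # make relative links absolute
        if urlparse(target).netloc.lower() == host:
            internal.append(target)
        else:
            external.append(target)
    return internal, external


internal, external = classify_outbound(
    "http://mywebsite.com/blog/post.html",
    ["/about.html", "archive.html", "http://othersite.org/", "https://mywebsite.com/contact"],
)
print(internal)  # same-site links, including the https one
print(external)  # ['http://othersite.org/']
```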

Page Rank (or PR)

PageRank, or Google PageRank, is an algorithm designed to determine the value of a given webpage by estimating the probability that a web surfer who follows links from other pages lands on it. It was developed by Google founders Larry Page and Sergey Brin, and for several years it has been one of the most popular ways to determine the value of a website or webpage. The algorithm is based on the principle that a person is most likely to land on a webpage that is linked to most often from the most popular pages.
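The "random surfer" idea can be computed by simple iteration: at each step the surfer follows one of the current page's links with probability `damping`, or jumps to a random page otherwise. This is a textbook sketch, not Google's production system. The damping value 0.85 is the one commonly quoted from the original PageRank paper and is an assumption here, as is the convention of spreading the rank of pages without outbound links over all pages.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Iteratively estimate PageRank for a small link graph.

    links maps each page to the list of pages it links to.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal probability everywhere
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}  # random-jump share
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outbound links: spread its rank over all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank


# Hypothetical graph: C is linked from both A and B; nothing links to B.
ranks = pagerank({"A": ["C"], "B": ["C"], "C": ["A"]})
print({page: round(value, 3) for page, value in ranks.items()})
```

C, with the most inbound links, ends up with the highest score, while B, which nobody links to, keeps only the random-jump share.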

Paid Inclusion

Paid Inclusion, in the context of search engines, is a service in which a search engine includes a particular webpage or website in its search index for a fee. The ranking position of the page or site, however, still depends on its relevance to the search query, as with any ordinary search listing. Most popular search engines like Google and Yahoo do not publicly offer paid inclusion; however, special-purpose search engines, such as those for engineering products or home appliances, charge a fee to include entries in their index.

Paid Listings

Paid listings are the search results included in the search index upon payment of a certain fee. Paid listings may simply mean inclusion in the index, or even paid positioning. These listings are usually displayed separately as advertisers’ links.

Paid Placement

Paid placement is a service offered by search engines to advertisers who wish to show their ads to people looking for information on a specific topic. It is offered by all major search engines like Google, Yahoo and Bing. Unlike natural or organic search results, paid placement guarantees a high position in a prominent place based on bidding, and is almost independent of the search algorithm. That is why people looking for immediate targeted traffic opt for paid placement instead of organic search optimization.

Pay-Per-Click (PPC)

Pay-per-click or cost-per-click marketing (advertising) refers to the service offered by search engines in which the advertiser pays a predetermined fee each time a search engine user clicks on the ad. While PPC or CPC marketing is still popular, other advertising methods like PPV (Pay Per View) and CPA (Cost Per Action) have captured a significant share of the market. CPC campaigns are often at risk of attack by competitors who hire people to click repeatedly on a rival's ads to quickly drain the campaign's funds.
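The arithmetic behind a PPC budget, and behind click fraud, is simple division. The figures below ($20.00 per day, $0.50 per click) are invented for illustration and kept in cents to avoid floating-point rounding; the sketch also assumes the ad stops running once the day's budget is spent.

```python
# Assumed figures, in cents: a $20.00 daily budget and a $0.50 cost per click.
DAILY_BUDGET_CENTS = 2000
COST_PER_CLICK_CENTS = 50


def clicks_per_budget(budget_cents, cpc_cents):
    """How many paid clicks the budget covers before the ad stops running."""
    return budget_cents // cpc_cents


def genuine_clicks_left(budget_cents, cpc_cents, fraudulent_clicks):
    """Clicks still available to real visitors after fraudulent clicks are paid for."""
    return max(0, clicks_per_budget(budget_cents, cpc_cents) - fraudulent_clicks)


print(clicks_per_budget(DAILY_BUDGET_CENTS, COST_PER_CLICK_CENTS))        # 40
print(genuine_clicks_left(DAILY_BUDGET_CENTS, COST_PER_CLICK_CENTS, 30))  # 10
```

In this example, 30 fake clicks from a competitor would leave room for only 10 real visitors that day.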

Position

Search Engine Position or SERP rank is the position at which a particular webpage is displayed in the results pages of the search engine in response to a particular query from the user. Naturally, all website and blog owners fight ferociously to gain the top spots in the Search Engine Results Pages (SERPs). Businesses spend thousands and even millions of dollars to gain the top spots in order to gain more customers than their rival(s).

Query

A query or keyword is the word or words entered by the search engine user, based on which links to relevant websites, blogs, images, videos etc. are displayed. Once the user enters the query, the search engine fetches the most authoritative webpage links and related information and orders them by descending relevance and authority on the SERP, or search engine results page.

Rank

Same as SERP position, rank refers to the position of a particular webpage in the search engine results. It is basically a function of how important the search engine considers the webpage in the context of the entered query or keyword. SERP rank or Search Engine Rank can be improved by frequently publishing unique, keyword-optimized content, as well as by building high-quality relevant links.

Reciprocal Link Exchange

Reciprocal link exchange refers to the practice of creating mutual hyperlinks between webpages belonging to different websites, so as to gain more authority in the search engines, thus getting ranked higher and getting more visitor traffic.

Results Page

A Search Engine Results Page or SERP is the dynamic webpage that contains links to, and/or short descriptions of, the pages matching the keywords or queries entered by search engine users. These pages are created dynamically by fetching information from the search index, and are ordered using the search engine's specific algorithm.

Robot

A robot, bot, crawler or spider is the active part of a search engine, the program or script that actually crawls websites across the WWW gathering new and updated information and updating the search engine’s index. Most search engines have robots, since it is the fastest way to create and update their index. Robots follow links from one webpage to another, and are often controlled by the Algorithm of the search engine, which decides the importance of specific webpages/websites based on factors like inbound/outbound links, keyword density, meta information, frequency of updates etc.

Robots.txt

Robots.txt is the universally accepted filename for the file in which a website or blog owner gives instructions to search engines, so that the search index and results are modified accordingly. Some website or blog owners block a few pages, or the entire blog or website, if they want to keep that content out of search results. Others tell search engine robots (bots, crawlers) how long to wait between successive requests. Some webmasters also list associated URLs, such as the sitemap URL. The file must be located at the root of the site, e.g. “http://mywebsite.com/robots.txt”, for search engine spiders to find it.
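Python's standard library ships a robots.txt parser, `urllib.robotparser`, which shows how a well-behaved crawler interprets the file. The robots.txt content below is a hypothetical example; `Crawl-delay` is a non-standard directive that not every crawler honors, and `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt served at http://mywebsite.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
Sitemap: http://mywebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "http://mywebsite.com/index.html"))          # True
print(parser.can_fetch("Googlebot", "http://mywebsite.com/private/notes.html"))  # False
print(parser.crawl_delay("Googlebot"))  # 10
print(parser.site_maps())               # ['http://mywebsite.com/sitemap.xml']
```

In a real crawler you would call `set_url(...)` and `read()` to download the live file instead of parsing a string.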

Search Engine

Search Engine is the term used to describe the whole system, from the array of datacenters (servers) to the program, algorithm or script, that crawls the World Wide Web (WWW), i.e. the websites and blogs on the internet, looking for information to build an index from which search results about a particular topic or keyword are retrieved when internet users submit queries. A search engine is one of the most integral elements of the WWW, since one can't possibly hope to find the best or latest information about a topic by manually browsing through hundreds of millions of websites and blogs! Some of the most popular general search engines today are Google, Yahoo, Bing (MSN) and Ask. There are other special search engines like WolframAlpha, which are more semantic and can even solve complex mathematical problems! Specialized search engines are also created and widely used by people looking for industrial products, scientific papers, patents etc.

Search Engine Marketing (SEM)

Search Engine Marketing is a broad term covering all forms of marketing through search engines, both paid and organic. SEM usually refers to SEO combined with PPC marketing for paid listings.

Search Engine Optimization (SEO)

SEO or Search Engine Optimization is the process of optimizing or modifying your website or blog so that search engines give it the maximum possible authority for the same content. Usually SEO involves simple activities like linking to your blog or website from other blogs, sites and forums; increasing the density of related keywords without making the content illegible; updating your content regularly etc. Unfortunately, in recent years SEO has taken precedence over creating unique, high-quality content in terms of the funds and time allocated.

Search Engine Optimization (SEO) Beginners Guide Part 2

5:04 AM

Google, Guide, SEO, SEO Beginners Guide, Tips

This is the second post in the Search Engine Optimization (SEO) Terms Explanation series. To read the first post, dealing with terms like Algorithm, Alt Tag, Analytics, Anchor Text, Backlink, Black Hat SEO, Broken Link, Doorway Page, Dynamic Content, Flash Optimization etc., please go to: SEO Beginners Guide Part 1

In this post we shall learn about SEO terms like Geo-targeting, Gray Hat SEO, Keyword Density, Link Popularity and Meta Tags.

Gateway Page

A gateway page is identical to a doorway page, and is primarily used to funnel search engine or paid traffic to another website or landing page. These gateway pages have little useful content and are often de-indexed by search engines when discovered.

Geographical Targeting

Geographical targeting or Geo-Targeting refers to the process of focusing your efforts on getting visitors from a specific continent, country, state or even city, using Search Engine Webmaster Tools for those search engines which offer this service (Google does!). By using a country domain extension like .us, .ca or .in you are already informing the search engines that your website or blog is primarily of relevance to people from the same region. Maintaining a high keyword density of the name of the geographic region is another way to target region specific search engine traffic.

Geographic Segmentation

Geographic segmentation refers to analytics specific to geo-targeting: it segments visitor traffic by the visitor's physical location, typically determined from the IP address of the visitor's computer. While this is very useful for local businesses and region-specific information websites, IP addresses registered to a location other than the visitor's actual one can skew the statistics. For example, someone may be using an internet connection whose IP address is registered by the ISP (internet service provider) in another state or even another country!
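Here is a sketch of IP-based segmentation using the standard `ipaddress` module. The prefix-to-country table is entirely hypothetical (the prefixes are reserved documentation ranges); real analytics tools use geo-IP databases. The result reflects where each IP address is registered, which is exactly why the statistics can be skewed.

```python
import ipaddress
from collections import Counter

# Hypothetical IP-prefix-to-country table (real tools use geo-IP databases).
GEO_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "US",
    ipaddress.ip_network("198.51.100.0/24"): "CA",
    ipaddress.ip_network("203.0.113.0/24"): "IN",
}


def country_of(ip):
    """Look up the country an IP address is registered in, per the table above."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return "unknown"


def segment(visitor_ips):
    """Count visits per country, based only on where each IP is registered."""
    return Counter(country_of(ip) for ip in visitor_ips)


visits = segment(["192.0.2.10", "192.0.2.99", "203.0.113.5", "10.0.0.1"])
print(visits)
```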

Gray Hat SEO

SEO practices which are neither ethical nor completely unethical, i.e. fall neither under White Hat SEO nor under Black Hat SEO, are referred to as Gray Hat SEO. These techniques include creation of Gateway or Doorway sites, re-publishing syndicated and duplicate content etc. In certain cases sites using Gray Hat SEO are blacklisted by the Search Engines, while in others they are not even detected.

Hidden Text

A very simple form of Black Hat SEO to increase keyword density and to add extra keywords, especially misspelled words, is to add text with the same font color as the background color. In some cases several title and comment tags in HTML are used for the same purpose. Usually search engines severely penalize and even blacklist sites using Hidden Text.

Hit

A hit is technically an HTTP request to a web server. So, for an HTML webpage with 1 CSS file, 3 images and 2 JavaScript files, there will be 1 + 3 + 2 + 1 (the HTML page itself) = 7 hits per page view. Clearly, hits are not an accurate measure of a website's visitor traffic.

HTML

HTML stands for HyperText Markup Language. It is the most common markup language used for creating web pages. It provides the directions, or markup, that tell web browsers how to interpret the information contained in the file before displaying it to the user. HTML5 is the latest version and is still under development. With HTML5, the standard is intended to be rich enough that no additional code will be required for videos and for operating external devices!

Hyperlink

A hyperlink is a link between two web pages, created using HTML. A hyperlink points to the address of the destination file, specified using an IP address or a domain-based URL. Hyperlinks connect pages on the same or different websites, hosted on the internet or on a local intranet server. Search engines usually give great weight to relevant links from pages they consider important. Google uses the PageRank algorithm to calculate the value of hyperlinks and the linked web pages.

Index

An index in the SEO context is the database of a search engine, with links to all the crawled web pages and information about what those pages are about. The search engine uses an algorithm to fetch pages relevant to the search term or query as a ranked list. Search engines generally use web crawlers, bots or spiders to go from one web page to another through links. Popular search engines like Google have billions of web pages in their search index, which is updated every few days. The index is usually spread over many data centers across the globe to maximize storage capacity and minimize the time taken to return results for the queries made by search engine users.

Inbound Links

Links or hyperlinks from other pages pointing to your web page are referred to as inbound links. These links carry varying degrees of importance for different search engines. For example, Google uses a ranking algorithm called PageRank to calculate the value of each link, and all indexed web pages are assigned scores based on the number and quality of their inbound links. For the purpose of PageRank calculation, usually only “dofollow” inbound links are considered important. For SEO, large numbers of popular and relevant inbound links are considered very important. The anchor text used for those links plays a significant role in Search Engine Results Page rankings.
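Counting "dofollow" inbound links means finding links to your page and skipping those marked `rel="nofollow"`. The sketch below does this over a few hypothetical pages on other sites; `LinkCollector` and `dofollow_inbound` are illustrative names, and a real tool would have to discover these pages by crawling first.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Records (href, rel values) for every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "a" and a.get("href"):
            rel = (a.get("rel") or "").lower().split()
            self.links.append((a["href"], rel))


def dofollow_inbound(target_url, pages):
    """Count links to target_url from the given pages, ignoring rel="nofollow" links."""
    count = 0
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href, rel in collector.links:
            if urljoin(page_url, href) == target_url and "nofollow" not in rel:
                count += 1
    return count


# Hypothetical pages on other sites.
PAGES = {
    "http://blog-a.com/review": '<a href="http://mywebsite.com/">great widgets</a>',
    "http://blog-b.com/ads": '<a rel="nofollow" href="http://mywebsite.com/">sponsored</a>',
    "http://blog-c.com/links": '<a href="http://othersite.org/">something else</a>',
}
print(dofollow_inbound("http://mywebsite.com/", PAGES))  # 1
```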

Keyword

A keyword is a group of one or more words which are considered to be relevant to the given topic. Keywords are used by search engines to find out how relevant a given web page is to the search query made by the search engine user. While creating websites and blogs, it is very important to include as many related keywords as possible to rank high in the search results.

Keyword Density

Keyword density measures how often a particular keyword or keyphrase appears in the given content. It is expressed as the percentage of words on a given web page that match the keyword. Keyword density is one of the most important factors of Search Engine Optimization or SEO. The generally accepted rule of thumb is that a keyword density of 2% to 4% works well for most search engines.
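Keyword density is a simple percentage: for a single word, occurrences divided by total words, times 100. For a multi-word keyphrase this sketch counts each occurrence as contributing all of its words, which is one common convention but an assumption here. The tokenizer (lower-cased words) is also a simplification.

```python
import re


def keyword_density(text, keyword):
    """Percentage of the words in `text` that belong to occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * hits * n / len(words)


# A 50-word page that mentions "widgets" once has a density of 2%.
page = "widgets " + "filler " * 49
print(keyword_density(page, "widgets"))  # 2.0
```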

Link

Same as Hyperlink.

Link Baiting

Link baiting is a white hat technique for gaining relevant backlinks for SEO. The most common form of link baiting is the creation of highly useful and popular content that others will want to link to from their blogs and websites. Sites like Wikipedia.org have millions of pages with comprehensive information about a wide range of topics in several languages, so people naturally link to those pages, treating them as references. There are many such popular blogs and websites on different topics or niches, which gain high PageRank thanks to thousands of backlinks to their internal pages. The posts that attract the most links are often lists like Top 10, Top 25 etc.

Link Exchange

Link exchange refers to a mutual reciprocation of linking to webpages in order to increase inbound links from relevant pages. Usually link exchange is considered ethical as long as both the pages are related. However, buying and selling links or linking to many unrelated pages may lead to blacklisting of a blog or website.

Link Farm

A link farm is a black hat SEO property, i.e. a webpage or several webpages linked to each other with the sole purpose of creating a closed network of links to gain high search ranking. When discovered, link farms usually get blacklisted or de-indexed by the search engines. Most link farms do not contain any unique or useful content, and are solely intended to create networks which can be sold off to others.

Link Popularity

Link popularity refers to the number and quality of inbound links to a webpage or website and the relevance of the linking pages to the linked pages. As far as Google is concerned, search engine rankings are only improved when the pages linking to each other are related and the anchor text contains one or more of the searched keywords. High PageRank inbound links are considered an essential part of SEO for Google.

Link Text

Link text is more popularly known as anchor text. It is the text used to link to another web page using HTML. Link text or anchor text tells the search engines what keywords are relevant to the linked page. Thus, the search ranking of the linked page depends not only on the PageRank of the linking webpage but also on the anchor text used.

Listings

Listings in SEO parlance are the ranked lists of indexed web pages, sorted according to the search engine's algorithm. The pages on which these listings are displayed are referred to as SERPs, or Search Engine Results Pages.

Local Search

Local search is a relatively recent feature of search engines that came into the spotlight after offline businesses started marketing their products and services through online advertising. Local search is very useful for finding hotels, nearby shops etc. Geo-targeting aims to improve the search ranking of a webpage or website for local searches.

Meta Description Tag

The meta description tag, often simply called the description tag, is the HTML code that gives search engines a short summary of the web page. It is not visible to visitors and is placed in the “head” section of the web page. Currently, search engines either display the page’s meta description in the search results along with the link, or select portions of the actual page content based on the search query.

Meta Keywords Tag

The meta keywords tag is the HTML code that describes a web page in short keyword phrases, helping user agents such as some search engine crawlers categorize the page. Unlike the meta description tag, the meta keywords tag is no longer considered useful for Google, though some other search engines may still use it.

Meta Robots Tag

The meta robots tag is the HTML code that tells search engine crawlers how to treat a particular web page. Examples of these instructions are whether or not to index the page and whether or not to follow the links on the page, i.e. count them as votes in favor of the linked pages. However, some search engines do not follow these instructions.

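Example:

<html>
<head>
<title>...</title>
<!-- Do not index this page and do not follow its links -->
<META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">
</head>
<body>...</body>
</html>

Other combinations of the same tag:

<!-- Do not index this page, but follow its links -->
<META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW">
<!-- Index this page, but do not follow its links -->
<META NAME="ROBOTS" CONTENT="INDEX, NOFOLLOW">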
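How a crawler might read these CONTENT values can be sketched in Python. This is a simplified, hypothetical parser that only understands the four index/follow directives used in this post and assumes a page is indexed and its links followed unless told otherwise; real crawlers recognize more directives and apply their own rules.

```python
def parse_robots(content: str) -> dict[str, bool]:
    """Turn a robots meta CONTENT value into index/follow flags.

    Missing directives default to True (index, follow). Matching is
    case-insensitive, so "NOINDEX, FOLLOW" and "noindex,follow" agree.
    Directives other than index/noindex/follow/nofollow are ignored.
    """
    flags = {"index": True, "follow": True}
    for directive in content.lower().split(","):
        directive = directive.strip()
        if directive in ("noindex", "nofollow"):
            flags[directive[2:]] = False  # "noindex" -> "index", "nofollow" -> "follow"
        elif directive in ("index", "follow"):
            flags[directive] = True
    return flags


print(parse_robots("NOINDEX, FOLLOW"))  # {'index': False, 'follow': True}
```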

Meta Search Engine

A meta search engine is not the same as a traditional search engine like Google: instead of keeping its own index, it uses the search indexes of other engines. Meta search engines usually rank results by the average ranking position a page gets across the engines they query. Two examples of meta search engines are Dogpile and Scour Search.
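The average-position idea can be sketched in Python. The engine result lists are hypothetical, and treating a URL that one engine did not return as simply absent for that engine (rather than penalizing it) is an assumption; real meta search engines use their own, usually more elaborate, merging rules.

```python
from collections import defaultdict


def merge_by_average_rank(result_lists: list[list[str]]) -> list[str]:
    """Merge ranked result lists from several engines into one list.

    Each URL's score is its average position (1 = top) across the lists it
    appears in; lower is better. Ties are broken alphabetically so the
    output is deterministic.
    """
    positions = defaultdict(list)
    for results in result_lists:
        for position, url in enumerate(results, start=1):
            positions[url].append(position)
    average = {url: sum(p) / len(p) for url, p in positions.items()}
    return sorted(average, key=lambda url: (average[url], url))


engine_a = ["a.example", "b.example", "c.example"]
engine_b = ["b.example", "c.example", "a.example"]
print(merge_by_average_rank([engine_a, engine_b]))
```

With these lists, b.example averages position 1.5, a.example 2 and c.example 2.5, so that is the merged order.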

Meta Tags

Meta tags are HTML elements that contain information about a web page but are not visible to visitors. They are useful for web browsers, search engines and other user agents. Meta tags are placed in the “head” section of a web page. Examples include the meta description, meta keywords, meta author and meta refresh (redirect) tags.

Example:

<head>
<meta name="description" content="Free Web tutorials" />
<meta name="keywords" content="HTML,CSS,XML,JavaScript" />
<meta name="author" content="Hege Refsnes" />
<meta http-equiv="content-type" content="text/html;charset=UTF-8" />
</head>
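To see what a crawler can read from these tags, here is a minimal Python sketch that runs the standard library's html.parser over the example head above. The class name is hypothetical, and it keeps only tags with a name attribute, so the http-equiv line is skipped.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collect name -> content pairs from <meta name="..." content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    # Self-closing tags such as <meta ... /> arrive via handle_startendtag,
    # whose default implementation calls handle_starttag.
    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            name = attributes.get("name")
            if name is not None:
                self.meta[name.lower()] = attributes.get("content", "")


head = """<head>
<meta name="description" content="Free Web tutorials" />
<meta name="keywords" content="HTML,CSS,XML,JavaScript" />
<meta name="author" content="Hege Refsnes" />
<meta http-equiv="content-type" content="text/html;charset=UTF-8" />
</head>"""

parser = MetaTagParser()
parser.feed(head)
print(parser.meta["description"])  # Free Web tutorials
```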

Search Engine Optimization (SEO) Beginners Guide Part 1

4:46 AM

Google, Guide, SEO, SEO Beginners Guide, Tips

Search Engine Optimization or SEO is something each blogger and website owner should be familiar with.

Even though many details of SEO are not essential in practice, you should be familiar with the basic terms associated with it. So, I’m going to write a series of 4 articles explaining common terms related to Search Engine Optimization. To begin with, Search Engine Optimization refers to the continuous process of modifying and supplementing a website, blog or single web page with additional information so that one or more search engines find it valuable and place it high on search results pages.

Algorithm

In the context of SEO, an algorithm is the mathematical model a search engine uses to sort and rank web pages in order of relevance to a particular search term or terms. This model takes into account factors such as keyword density and the number of inbound links to the page.

Algorithmic Results

Algorithmic results are the results a search engine displays after processing a search term or query and fetching the most relevant web pages from its index according to its algorithm. They are distinct from the paid ads displayed alongside the normal search results.

Alt Tag/Alt Text

Alt text (often called an alt tag, although it is really the alt attribute of an image) is the text displayed in place of an image when the image can’t be loaded, or before it has loaded. Alt text also acts as the anchor text of image links, and search engines often use it to decide which image searches an image should appear in.
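A simple SEO check is to list images that have no alt text. Below is a minimal Python sketch using the standard library's html.parser; the class name and sample HTML are hypothetical. It flags empty alt values too, although alt="" is the intended choice for purely decorative images, so flagged images still need a human look.

```python
from html.parser import HTMLParser


class MissingAltParser(HTMLParser):
    """Record the src of every <img> with no alt attribute or an empty one."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # An alt attribute written without a value is reported as None.
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src"))


parser = MissingAltParser()
parser.feed(
    '<img src="logo.png" alt="Dragon Blogger logo">'
    '<img src="chart.png">'
    '<img src="spacer.gif" alt="">'
)
print(parser.missing)  # ['chart.png', 'spacer.gif']
```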

Analytics

In the context of SEO, analytics refers to statistics about a particular website or webpage, such as the number of inbound and outbound links, keyword densities, search impressions etc.

Anchor Text

Anchor text is the text of a hyperlink (or simply a link) that points to another web address or URL. Anchor text is one of the most important parts of a link, as it affects the search engine ranking of the linked page for related searches.

Backlink

A backlink is a link from another page to one’s own webpage; it is also referred to as an inbound link. Backlinks are often considered the primary factor in determining the search ranking of a page. Google uses the PageRank algorithm to evaluate the quality and number of backlinks to a particular webpage.
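The core PageRank idea, that a page is important if important pages link to it, can be sketched as a power iteration in Python. This follows the textbook formulation with the commonly cited damping factor of 0.85; the three-page graph is hypothetical, and Google's production ranking uses many more signals than this.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Simplified PageRank by power iteration over an in-memory link graph.

    `links` maps each page to the pages it links to; every linked page must
    also be a key. A page with no outgoing links spreads its rank evenly over
    all pages, so the scores always sum to 1.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small base share, then receives rank from its backlinks.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            targets = outgoing if outgoing else pages  # dangling page: share with everyone
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical site: both inner pages link back to the home page.
graph = {"home": ["about", "blog"], "about": ["home"], "blog": ["home"]}
scores = pagerank(graph)
print(max(scores, key=scores.get))  # home
```

The home page ends up with the highest score because it is the only page with two backlinks.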

Banned

Banned or blacklisted sites are those that have been de-indexed by one or more search engines because of unethical SEO practices, use of content they do not legally own, etc. Banned sites receive zero traffic from those search engines while they are blacklisted, but they can request to be included in the search results again.

Black Hat SEO

All unethical SEO practices that deceive search engine users or robots are referred to as black hat SEO. The term is widely used and covers practices such as spamming other web pages with links to one’s own pages; hiding content, for example by writing extra keywords in the same color as the background so that only search engine robots detect them; presenting alternate web pages stuffed with extra keywords to search engine robots (cloaking); and buying or selling links purely for SEO. Most search engines either penalize or de-index sites they discover using black hat SEO practices.

Blacklisted

Meaning the same as banned sites, blacklisted sites are those which have been removed from the index of one or more search engines due to engaging in unethical or black hat SEO activities.

Broken Link

Broken links or dead links are links that lead to a non-existent page. This may be because the linked page has been blocked or deleted, or because there is an error in the link URL, say http://www.dragonblogger..com instead of http://www.dragonblogger.com. Search engines are known to penalize websites with many broken links, since such websites are not user-friendly at all!
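Checking a link fully means requesting it and looking at the HTTP status code, but the typo in the example URL above can be caught without any network access. Below is a minimal Python sketch; the function names are hypothetical, and treating every 4xx/5xx status as broken is a common simplification that ignores redirects, timeouts and "soft 404" pages.

```python
from urllib.parse import urlparse


def has_malformed_host(url: str) -> bool:
    """True if the URL has no host or its host contains an empty label, as in 'a..com'."""
    host = urlparse(url).hostname
    if not host:
        return True
    if host.endswith("."):
        host = host[:-1]  # one trailing dot is legal in a fully qualified name
    return any(label == "" for label in host.split("."))


def is_broken_status(status_code: int) -> bool:
    """Treat 4xx and 5xx HTTP responses (e.g. 404 Not Found) as broken links."""
    return 400 <= status_code <= 599


print(has_malformed_host("http://www.dragonblogger..com"))  # True
print(has_malformed_host("http://www.dragonblogger.com"))   # False
```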

Click-Through Rate (CTR)

Often used to measure the effectiveness or earning potential of advertisements on web pages, CTR or click-through rate is also an essential part of SEO analysis. In this context, it is the percentage of search engine users who, after seeing your web page’s listing on the search engine results page, click through to your page.
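As a worked example with made-up numbers: if a page's listing was shown 2,000 times on results pages and clicked 50 times, its CTR is 50 / 2000 = 2.5%. The same arithmetic in Python, with a hypothetical helper name:

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a percentage: clicks on a listing divided by the times it was shown."""
    if impressions == 0:
        return 0.0  # a listing that was never shown has no meaningful CTR
    return 100 * clicks / impressions


print(click_through_rate(clicks=50, impressions=2000))  # 2.5
```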

Cloaking

Cloaking in the context of SEO is usually the practice of serving different web pages to different user agents, say one version of a page for search engines and another for normal visitors. Cloaking is also used to display different information to users in different geographic locations, for example with server-side scripts such as PHP. The former type of cloaking is considered a form of black hat SEO, while the latter usually is not.

Conversion

In SEO parlance, conversion usually means turning a search engine user first into a visitor to the webpage or landing page and then into a buyer or subscriber. The term is used loosely for the whole process or for any single step of it. The search engine user may come to the web page via either normal search listings or paid listings.

Conversion Analytics

While analytics typically includes all activity on the webpages and websites as a whole, conversion analytics specifically refers to the statistics of conversion of traffic into buyers and/or subscribers. It includes the source of traffic (paid listings or free listings), the percentage of visitors who complete the desired activity (conversion) and the approximate return on total investment, in case the listing is a paid one.
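A conversion report of this kind can be sketched in Python. All figures below are invented, the class and function names are hypothetical, and the ROI formula used here, (revenue - cost) / cost, is one common definition rather than something this post specifies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceStats:
    """Hypothetical traffic figures for one traffic source."""
    visitors: int
    conversions: int
    cost: float = 0.0     # money spent on the listing (0 for free listings)
    revenue: float = 0.0  # money earned from the conversions


def conversion_rate(stats: SourceStats) -> float:
    """Percentage of visitors who completed the desired action."""
    return 100 * stats.conversions / stats.visitors if stats.visitors else 0.0


def roi(stats: SourceStats) -> Optional[float]:
    """Return on investment as a percentage; None when nothing was spent."""
    if stats.cost == 0:
        return None
    return 100 * (stats.revenue - stats.cost) / stats.cost


sources = {
    "free listings": SourceStats(visitors=1200, conversions=30, revenue=900.0),
    "paid listings": SourceStats(visitors=400, conversions=12, cost=250.0, revenue=360.0),
}
for name, stats in sources.items():
    print(name, conversion_rate(stats), roi(stats))
```

With these numbers, free listings convert 2.5% of visitors, paid listings convert 3.0%, and the paid campaign returns 44% on its cost.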

Conversion Rate

Conversion rate is the percentage of visitors reaching the landing page who complete the process of buying and/or subscribing, thus reaching the intended goal of the website owner. Conversion rate varies based on the quality of the landing page, the source of traffic and the particular topic or niche which the page is about.

Crawler

The most essential part of any search engine, a crawler, spider, robot or simply bot is the script used to collect data from webpages across the World Wide Web or WWW for creating an index which is used to list results for relevant search queries.
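The follow-the-links loop at the heart of a crawler can be sketched in Python as a breadth-first search. To keep it runnable without network access, a hypothetical in-memory site stands in for the Web; a real crawler would also download pages over HTTP, respect robots rules and schedule revisits.

```python
from collections import deque
from typing import Callable, Iterable


def crawl(start: str, fetch_links: Callable[[str], Iterable[str]],
          max_pages: int = 100) -> list[str]:
    """Breadth-first crawl from `start`, visiting each page at most once.

    `fetch_links(url)` returns the URLs linked from `url`; it stands in for
    downloading and parsing a real page. Stops after `max_pages` pages.
    """
    visited = []
    seen = {start}
    frontier = deque([start])
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)  # a real crawler would store the page for indexing here
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


# Hypothetical site: page -> links found on that page.
web = {
    "/": ["/about", "/blog"],
    "/about": [],
    "/blog": ["/blog/seo-guide", "/"],
    "/blog/seo-guide": ["/about"],
}
print(crawl("/", lambda url: web.get(url, [])))
```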

Delisted

Delisted URLs or websites are the same as de-indexed or blacklisted URLs and have been either permanently or temporarily removed from search engine results due to unethical SEO practices.

Description Tag

Description or meta description tags contain HTML text that describes what the webpage is about. Search engines often use these tags to give users a glimpse of the webpages in the results. In recent times, Google and other search engines have often ignored these tags in favor of snippets from the actual content of the pages. Early search engines relied heavily on meta tags such as the description tag to sort search results.

Directory

Directories are indexes of websites and blogs organized by category, popularity and/or age. Functionally similar to search engine indexes, directories are compiled and edited manually from time to time. People looking for websites and blogs on particular topics often choose directories over search engines to save time, since manual compilation helps ensure that the listed sites are relevant and authentic, while search engine algorithms can be gamed.

Doorway Page

A doorway page is a web page designed specifically to attract large volumes of traffic from search engine listings or paid listings and then direct the visitors to another website or landing page. Doorway pages typically have little useful content and are heavily optimized for search engines, with lots of keywords and inbound links. Search engines usually blacklist doorway pages when they discover them.

Dynamic Content

Most often, regular sites have static pages with the same content which may be updated manually. However, certain pages such as search engine result pages or SERPs display different information based on the input from the user, and are referred to as dynamic web pages. These pages are sometimes stored as static pages for future reference. Search engines often ignore dynamic web pages.

Flash Optimization

Flash content is a form of vector-based graphical content used to create attractive designs on web pages. Some webpages are built entirely in Flash. Since many search engines cannot index or interpret Flash content, additional text must be supplied to tell search engine crawlers what these pages are about.
